Highlights

YouTube Channel

Lighthearted bite-sized ML videos for your AI Coffee Break! 📺 Mostly videos about the latest technical advancements in AI, such as large language models (LLMs), text-to-image models and everything cool in natural language processing, computer vision, etc.!

Reviewing

ACL 2025 Area Chair

ICLR, Monthly *ACL Rolling Review (ARR), EACL, EMNLP, NAACL, ACL, CVPR, ACMMM, EurNLP

Workshops: MULA, RepL4NLP, LIMO

ACL 2021 (Outstanding Reviewer)

Honors

DAAD Vollstipendium für Absolventen Deutscher Auslandsschulen

Full scholarship to study the subject of my choice in Germany after graduating high school in Romania

Young Researcher at the Heidelberg Laureate Forum 2022

Recipient of the ABBE GRANT promoted by the Carl-Zeiss Stiftung

Nominated for the GI Dissertationspreis 2024, Dagstuhl

Nominated by Heidelberg University for my PhD thesis

Publications

Visit my Google Scholar page for a complete list. Selection:

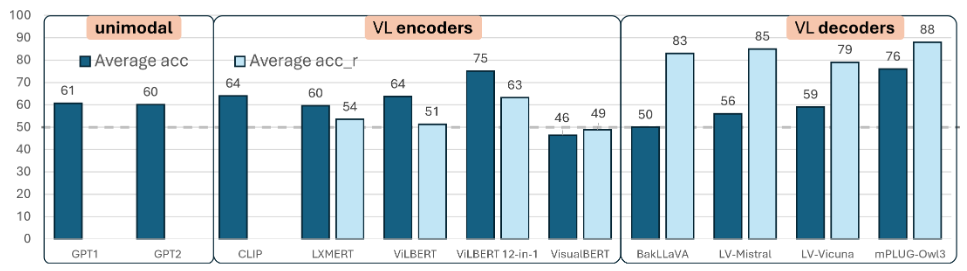

Do Vision & Language Decoders use Images and Text equally? How Self-consistent are their Explanations?

Parcalabescu, L. and Frank, A., 2025. The Thirteenth International Conference on Learning Representations (ICLR)

Vision and language models (VLMs) are currently the most generally performant architectures on multimodal tasks. Next to their predictions, they can also produce explanations, either in post-hoc or CoT settings. However, it is not clear how much they use the vision and text modalities when generating predictions or explanations. In this work, we investigate if VLMs rely on modalities differently when generating explanations as opposed to when they provide answers. We also evaluate the self-consistency of VLM decoders in both post-hoc and CoT explanation settings, by extending existing tests and measures to VLM decoders. We find that VLMs are less self-consistent than LLMs. The text contributions in VL decoders are much larger than the image contributions across all measured tasks. And the contributions of the image are significantly larger for explanation generations than for answer generation. This difference is even larger in CoT compared to the post-hoc explanation setting. We also provide an up-to-date benchmarking of state-of-the-art VL decoders on the VALSE benchmark, which to date focused only on VL encoders. We find that VL decoders are still struggling with most phenomena tested by VALSE.

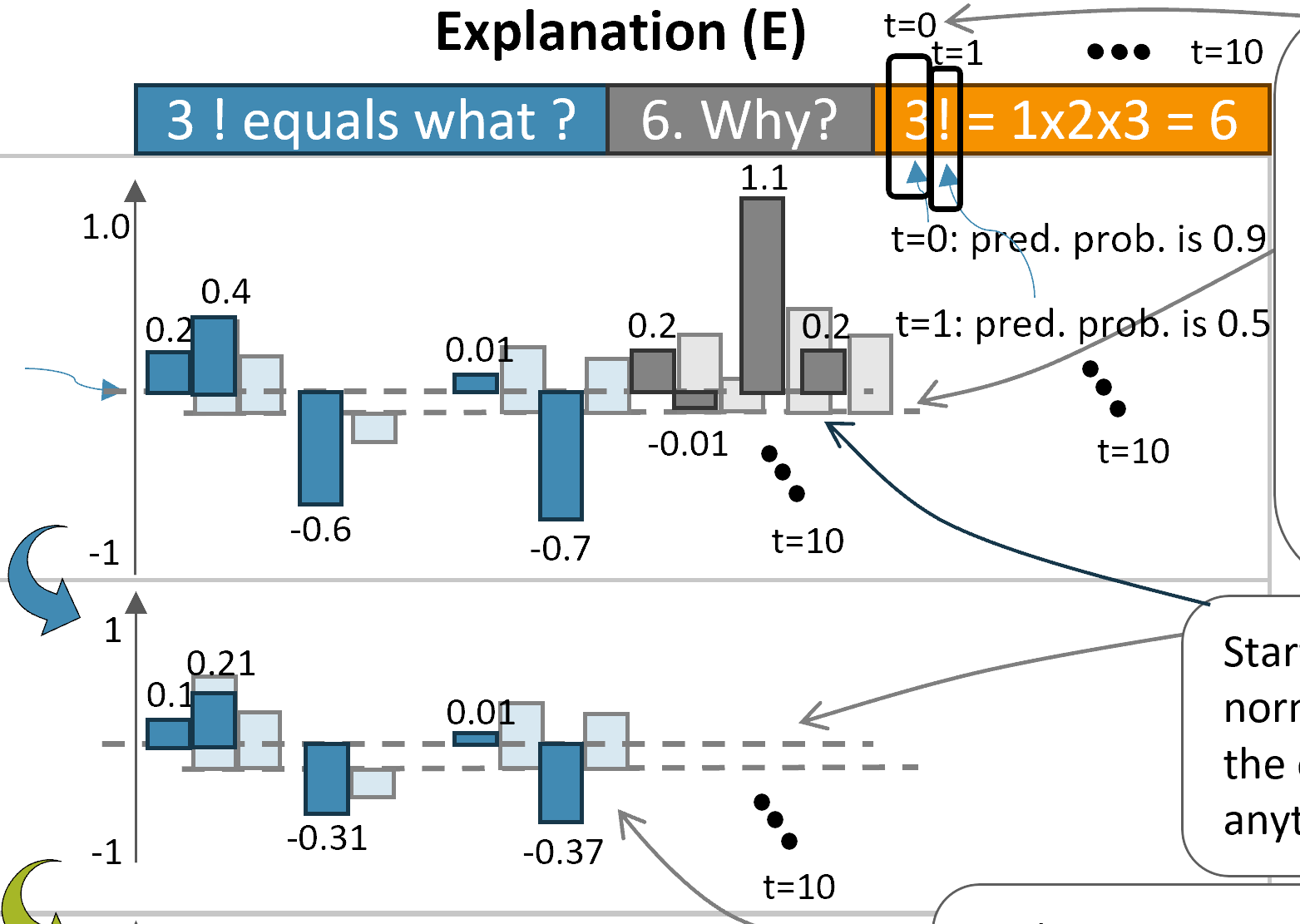

On Measuring Faithfulness or Self-Consistency of Natural Language Explanations

Parcalabescu, L. and Frank, A., 2024. Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL 2024)

Large language models (LLMs) can explain their predictions through post-hoc or Chain-of-Thought (CoT) explanations. But an LLM could make up reasonable-sounding explanations that are unfaithful to its underlying reasoning. Recent work has designed tests that aim to judge the faithfulness of post-hoc or CoT explanations. In this work we argue that these faithfulness tests do not measure faithfulness to the models’ inner workings, but rather their self-consistency at output level. Our contributions are three-fold: i) We clarify the status of faithfulness tests in view of model explainability, characterising them as self-consistency tests instead. This assessment we underline by ii) constructing a Comparative Consistency Bank for self-consistency tests that for the first time compares existing tests on a common suite of 11 open LLMs and 5 tasks, including iii) our new self-consistency measure CC-SHAP. CC-SHAP is a fine-grained measure (not a test) of LLM self-consistency. It compares how a model’s input contributes to the predicted answer and to generating the explanation. Our fine-grained CC-SHAP metric allows us to compare LLM behaviour when making predictions and to analyse the effect of other consistency tests at a deeper level, which takes us one step further towards measuring faithfulness by bringing us closer to the internals of the model than strictly surface output-oriented tests.

ViLMA: A Zero-Shot Benchmark for Linguistic and Temporal Grounding in Video-Language Models

Kesen, I., Pedrotti, A., Dogan, M., Cafagna, M., Acikgoz, E.C., Parcalabescu, L., Calixto, I., Frank, A., Gatt, A., Erdem, A. and Erdem, E., 2023. The Twelfth International Conference on Learning Representations (ICLR)

With the ever-increasing popularity of pretrained Video-Language Models (VidLMs), there is a pressing need to develop robust evaluation methodologies that delve deeper into their visio-linguistic capabilities. To address this challenge, we present ViLMA (Video Language Model Assessment), a task-agnostic benchmark that places the assessment of fine-grained capabilities of these models on a firm footing. Task-based evaluations, while valuable, fail to capture the complexities and specific temporal aspects of moving images that VidLMs need to process. Through carefully curated counterfactuals, ViLMA offers a controlled evaluation suite that sheds light on the true potential of these models, as well as their performance gaps compared to human-level understanding. ViLMA also includes proficiency tests, which assess basic capabilities deemed essential to solving the main counterfactual tests. We show that current VidLMs' grounding abilities are no better than those of vision-language models which use static images. This is especially striking once the performance on proficiency tests is factored in. Our benchmark serves as a catalyst for future research on VidLMs, helping to highlight areas that still need to be explored.

MM-SHAP: A Performance-agnostic Metric for Measuring Multimodal Contributions in Vision and Language Models & Tasks

Parcalabescu, L. and Frank, A., ACL 2023. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 4032–4059, Toronto, Canada. Association for Computational Linguistics.

Vision and language models (VL) are known to exploit unrobust indicators in individual modalities (e.g., introduced by distributional biases) instead of focusing on relevant information in each modality. That a unimodal model achieves similar accuracy on a VL task to a multimodal one, indicates that so-called unimodal collapse occurred. However, accuracy-based tests fail to detect e.g., when the model prediction is wrong, while the model used relevant information from a modality. Instead, we propose MM-SHAP, a performance-agnostic multimodality score based on Shapley values that reliably quantifies in which proportions a multimodal model uses individual modalities. We apply MM-SHAP in two ways: (1) to compare models for their average degree of multimodality, and (2) to measure for individual models the contribution of individual modalities for different tasks and datasets. Experiments with six VL models -- LXMERT, CLIP and four ALBEF variants -- on four VL tasks highlight that unimodal collapse can occur to different degrees and in different directions, contradicting the wide-spread assumption that unimodal collapse is one-sided. Based on our results, we recommend MM-SHAP for analysing multimodal tasks, to diagnose and guide progress towards multimodal integration. Code available at https://github.com/Heidelberg-NLP/MM-SHAP.

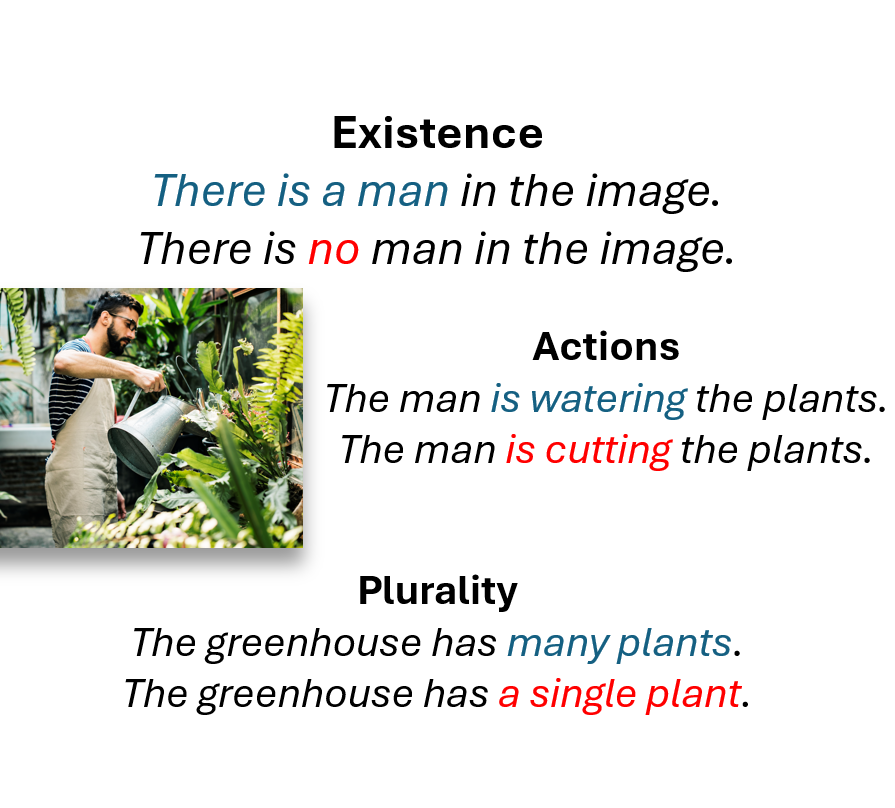

VALSE: A Task-Independent Benchmark for Vision and Language Models Centered on Linguistic Phenomena

Parcalabescu, L., Cafagna, M., Muradjan, L., Frank, A., Calixto, I. and Gatt, A., ACL 2022. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 8253–8280, Dublin, Ireland. Association for Computational Linguistics.

We propose VALSE (Vision And Language Structured Evaluation), a novel benchmark designed for testing general-purpose pretrained vision and language (V&L) models for their visio-linguistic grounding capabilities on specific linguistic phenomena. VALSE offers a suite of six tests covering various linguistic constructs. Solving these requires models to ground linguistic phenomena in the visual modality, allowing more fine-grained evaluations than hitherto possible. We build VALSE using methods that support the construction of valid foils, and report results from evaluating five widely-used V&L models. Our experiments suggest that current models have considerable difficulty addressing most phenomena. Hence, we expect VALSE to serve as an important benchmark to measure future progress of pretrained V&L models from a linguistic perspective, complementing the canonical task-centred V&L evaluations.

Scientific Talks

- Keynote at the Heidelberg Postdoc Symposium hosted by the DKFZ (2025)

- Keynote at the National Conference on AI Transformations: Language, Technology, and Society, Utrecht (2025)

- Invited talk at the University of Sheffield NLP Group (2024)

- Invited talk at Datafest Yerevan Conference (2024)

- Invited talk at Aleph Alpha - Heidelberg (2024)

- Invited talk at cogsys-group, CLASP, Gothenburg, Sweden (2024)

- Invited talk about own work at heidelberg.ai at the DKFZ (2023)

- Invited talk about Vision and Language (VL) models at the LIMO 2023 Workshop (2023)

- Invited talk about own work at the ICDM Workshop Foundation Models in Vision and Language (2022)

- Invited talk about own work “Multimodal Learning: Integrating Vision and Language” at StuTS 2020

Science Communication Talks

Talks for broader audiences:

- Participated as a science communicator in the first Romanian-language Native Scientists Workshop Heidelberg (2025)

- Panelist at the VOICES festival in Zagreb, Croatia (2025)

- Talk and discussion "Artificial Intelligence: Which skills do I need?" at E-engAGEd organized by EAVI Media Literacy for Citizenship (2025)

- Podcast interview "Deep Learning with Letitia Parcalabescu" on the Weaviate Podcast #96 (2024)

- Panelist at the VOICES festival in Florence, Italy (2024)

- Invited to talk (in German) about AI for a general audience at ARD MixTalk (2023)

- Podcast (in German) about AI for the Handelsblatt (2023)

- Invited panelist on Digital Tools & AI in Research at the To be honest Conference (2023)

- Panelist on the “Popularization in ML Research” panel at the ML in PL conference (2022)

- “AI for good” at the EAVI Conversations (2021)

- Guest on the Transformative Ideas Podcast

- Guest on the MLST YouTube channel and podcast

- “Why Multi-Modality is the Future of Machine Learning” at the ML Engineered Podcast

Teaching and Supervision

Teaching

Own courses organized independently at Heidelberg University, including lectures, exercises, exam / practical project

- Methods for Learning without Annotated Data

  Master Level Course (in English), every Summer Term from 2020 to 2024 with very good reviews

- Designing Experiments For Machine Learning

  Bachelor Level Course (in German), every Winter Term from 2021 to 2024 with very good reviews

- Deep Learning Course for Biologists

  at the HBIGS graduate school Heidelberg every term since 2023

- Programming Exam

  Summer Term 2020, Winter Term 20/21

- Resource course

  Bachelor Level Course (in German) Summer Term 2020, Winter Term 20/21

- Integrating Vision and Language: Achievements and Challenges in Multimodal Machine Learning

  Master Level Seminar, Winter Term 19/20

Supervision

Co-supervision of theses with Prof. Anette Frank:

- Master theses: Phillip Wiesenbach, Julia Suter

- Bachelor thesis: Lillita Muradjan